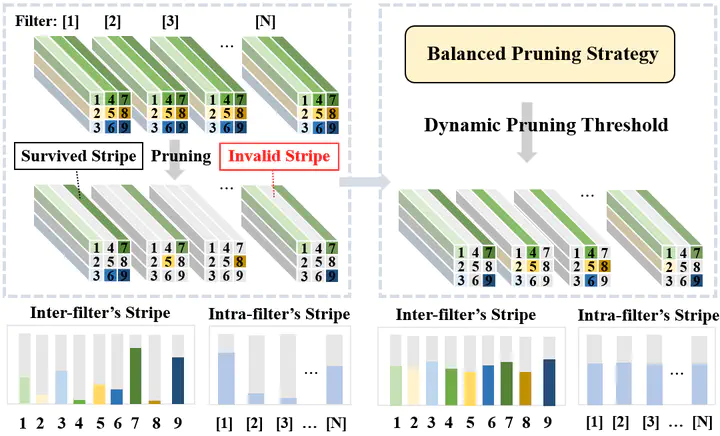

Balanced Stripe-wise Pruning in the Filter

Abstract

Neural network pruning is a promising way to compress and accelerate modern deep convolutional networks. Stripe-wise pruning, which operates at a finer granularity than traditional filter-level methods, has become a focus of research. Building on this earlier work, we propose balanced stripe-wise pruning (BSWP), which combines a balanced pruning strategy with a dynamic pruning threshold to achieve higher performance. Specifically, the balanced pruning strategy redistributes the surviving inter-filter and intra-filter stripes, while the dynamic pruning threshold further balances survival rates across all layers. Comprehensive experiments are conducted on two public datasets (CIFAR-10 and TinyImageNet-200) with different models (ResNet and VGG). The experimental results show that the proposed method removes the most parameters while achieving the highest accuracy. Our code is available at: https://github.com/ajdt1111/BSWP.
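To make the notion of a stripe concrete, the following is a minimal NumPy sketch of generic stripe-wise pruning on a single convolutional layer. It is not the BSWP algorithm: the abstract does not specify the balanced strategy or how the dynamic threshold is computed, so the magnitude-based criterion, the quantile threshold, and the names `stripe_norms`, `prune_stripes`, and `keep_ratio` are all illustrative assumptions.

```python
import numpy as np


def stripe_norms(weight):
    """L2 norm of every stripe in a conv weight of shape (out_ch, in_ch, kh, kw).

    A stripe is the 1x1 slice across all input channels at one spatial
    position of one filter, so the result has shape (out_ch, kh, kw).
    """
    return np.sqrt((weight ** 2).sum(axis=1))


def prune_stripes(weight, keep_ratio):
    """Zero out the weakest stripes of one layer (hypothetical helper).

    Assumption: the layer threshold is the (1 - keep_ratio) quantile of the
    stripe norms. This is a stand-in criterion, not the paper's method.
    """
    norms = stripe_norms(weight)
    threshold = np.quantile(norms, 1.0 - keep_ratio)
    mask = norms >= threshold                 # True where a stripe survives
    pruned = weight * mask[:, None, :, :]     # broadcast mask over in_ch
    return pruned, mask


rng = np.random.default_rng(0)
weight = rng.normal(size=(8, 4, 3, 3))        # 8 filters, 4 input channels, 3x3
pruned, mask = prune_stripes(weight, keep_ratio=0.5)
survival_rate = mask.mean()                   # fraction of stripes kept in this layer
```

Because the threshold here is derived from each layer's own norm distribution, every layer keeps roughly the same fraction of stripes. That is one simple way to even out per-layer survival rates, and it may differ from the dynamic threshold the authors propose.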