Reweighted Dynamic Group Convolution

Basic Structure

Abstract

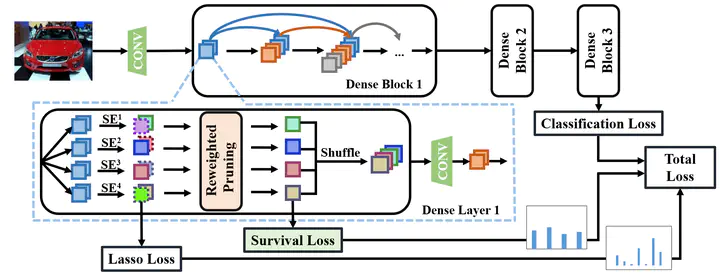

Computational efficiency is a persistent requirement when deploying modern deep convolutional networks in real-world applications, and various forms of group convolution have been widely used to reduce network complexity. Building on this prior work, this paper proposes a new reweighted dynamic group convolution (RDGC) structure, comprising a reweighted pruning module and a survival loss, for more precise channel pruning. Specifically, a layer-wise pruning rate adjustment strategy is employed together with an intra-cluster loss to implicitly guide the learning of self-attention. The proposed model is better able to select suitable channels when constructing the filter groups. Its effectiveness is demonstrated by extensive experiments on four public datasets: CIFAR-10, CIFAR-100, and two fine-grained image datasets (Stanford Dogs and Stanford Cars).
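
To make the idea of selecting and reweighting channels concrete, here is a minimal pure-Python sketch of saliency-based channel pruning for a single filter group. It is an illustration under stated assumptions, not the RDGC implementation: the function names `channel_saliency` and `select_and_reweight` are hypothetical, the mean absolute activation stands in for a learned self-attention score, and the survival loss, intra-cluster loss, and layer-wise pruning rate adjustment are not modeled.

```python
def channel_saliency(feature_maps):
    """One score per channel: mean absolute activation.

    Assumption: this fixed statistic stands in for a learned attention score.
    """
    return [
        sum(abs(v) for row in fmap for v in row) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]


def select_and_reweight(feature_maps, prune_rate):
    """Keep the most salient channels and scale each kept channel by its score.

    prune_rate is the fraction of input channels dropped for this group
    (hypothetical parameter; at least one channel is always kept).
    """
    scores = channel_saliency(feature_maps)
    n = len(scores)
    n_keep = max(1, n - int(n * prune_rate))
    # Rank channels by score, take the top n_keep, then restore channel order.
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:n_keep])
    reweighted = [
        [[scores[i] * v for v in row] for row in feature_maps[i]]
        for i in keep
    ]
    return keep, reweighted


# Four 2x2 input channels with different activation magnitudes.
x = [
    [[0.1, 0.1], [0.1, 0.1]],
    [[2.0, 2.0], [2.0, 2.0]],
    [[0.5, 0.5], [0.5, 0.5]],
    [[1.0, 1.0], [1.0, 1.0]],
]
keep, out = select_and_reweight(x, prune_rate=0.5)
print(keep)  # [1, 3]
```

With a pruning rate of 0.5, only the two strongest channels (indices 1 and 3) survive, and each is scaled by its own score before it would be passed to the group's convolution. In a dynamic group convolution, each group would run its own selection on every input, so different groups and different samples may keep different channels.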